I have a lot to say about renormalization; if I wait until I’ve read everything I need to know about it, my essay will never be written; I’ll die first; there isn’t enough time.

Click this link and the one above to read what some experts argue is the why and how of renormalization. Do it after reading my essay, though.

There’s a problem inside the science of science; there always has been. Facts don’t match the mathematics of theories people invent to explain them. Math seems to remove important ambiguities that underlie all reality.

People noticed the problem as soon as they started doing science. The ratio of a circle's circumference to its diameter could never be pinned down exactly; not when Archimedes studied it more than 2,200 years ago, and not now. The number π is the problem; it's irrational, not a fraction; it's a number whose digits never end and never repeat: 3.14159…forever into infinity.

More confounding, π is transcendental: it is not the root of any polynomial equation with integer coefficients, so no algebraic formula can produce it exactly. It lies at the crossroads of mathematics and physical reality, a mysterious number at the heart of creation, because without it the circumferences of circles and the surface areas and volumes of spheres could not be calculated with arbitrary precision.

The diameter of a circle must be multiplied by π to calculate its circumference, and the circumference divided by π to recover the diameter. No one can ever know everything about a circle, because the decimal expansion of π never ends and, in truth, can never be written down in full.

Long ago people learned to use the fraction 22/7 or, for more accuracy, 355/113. These fractions only approximate π, but they were easy to work with and close enough for engineering problems.
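For readers who like to check such things, here is a minimal Python sketch comparing the two fractions with π. One caveat: Python's `math.pi` is itself only a 64-bit floating-point approximation, good to about 16 significant digits, so even the "true" value in the comparison is a stand-in.

```python
import math

def approximation_error(numerator: int, denominator: int) -> float:
    """Absolute difference between a fraction and math.pi (itself a float approximation of π)."""
    return abs(numerator / denominator - math.pi)

for num, den in [(22, 7), (355, 113)]:
    value = num / den
    err = approximation_error(num, den)
    print(f"{num}/{den} = {value:.10f}, off by {err:.2e}")
```

Run it and 22/7 misses by about a thousandth, while 355/113 misses by less than three ten-millionths.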

Fast forward to Isaac Newton, the English astronomer and mathematician who studied the motion of the planets. Newton published Philosophiæ Naturalis Principia Mathematica in 1687. I have a modern copy in my library. It's filled with formulas and derivations. Not one of them describes the real world exactly; not one.

Newton's equation for gravity describes the interaction between two objects: the strength of attraction between Sun and Earth, for example, and the resulting motion of Earth. The problem is that the Moon and Mars and Venus, and many other bodies, warp the space-time waters in the pool where Earth and Sun swim. No general formula exists that can determine the future of such a system exactly.

In 1887 Heinrich Bruns, and a few years later Henri Poincaré, proved that such formulas cannot be written. The three-body problem (or any N-body problem, for that matter) has no general solution that can be written as a single formula. Fudge-factors must be introduced by hand, Richard Feynman once complained. Powerful computers combined with numerical methods seem to work well enough for some problems.
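Since no formula exists, the numerical route looks something like the sketch below: a minimal Python example, not how NASA or anyone else actually does it, that steps three equal masses forward in time with the leapfrog (velocity Verlet) method, one common integrator among many. Assumptions: units are scaled so the gravitational constant is 1, motion is confined to a plane, and the starting positions and velocities are those of the well-known "figure-eight" three-body orbit. In nature the total energy stays constant; the small drift the code reports is the method's own error.

```python
import math

G = 1.0  # gravitational constant in scaled units (assumption for simplicity)

def accelerations(pos, masses):
    """Pairwise Newtonian accelerations; pos is a list of [x, y] lists."""
    n = len(pos)
    acc = [[0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            dx = pos[j][0] - pos[i][0]
            dy = pos[j][1] - pos[i][1]
            r3 = (dx * dx + dy * dy) ** 1.5
            # Equal and opposite pulls: body i toward j, body j toward i.
            acc[i][0] += G * masses[j] * dx / r3
            acc[i][1] += G * masses[j] * dy / r3
            acc[j][0] -= G * masses[i] * dx / r3
            acc[j][1] -= G * masses[i] * dy / r3
    return acc

def energy(pos, vel, masses):
    """Total kinetic plus gravitational potential energy."""
    kinetic = sum(0.5 * m * (v[0] ** 2 + v[1] ** 2) for m, v in zip(masses, vel))
    potential = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            r = math.hypot(pos[j][0] - pos[i][0], pos[j][1] - pos[i][1])
            potential -= G * masses[i] * masses[j] / r
    return kinetic + potential

def leapfrog(pos, vel, masses, dt, steps):
    """Kick-drift-kick integration; returns new positions and velocities."""
    pos = [p[:] for p in pos]
    vel = [v[:] for v in vel]
    acc = accelerations(pos, masses)
    for _ in range(steps):
        for v, a in zip(vel, acc):  # half kick
            v[0] += 0.5 * dt * a[0]
            v[1] += 0.5 * dt * a[1]
        for p, v in zip(pos, vel):  # drift
            p[0] += dt * v[0]
            p[1] += dt * v[1]
        acc = accelerations(pos, masses)
        for v, a in zip(vel, acc):  # half kick
            v[0] += 0.5 * dt * a[0]
            v[1] += 0.5 * dt * a[1]
    return pos, vel

# Initial conditions for the figure-eight three-body orbit (equal masses, G = 1).
masses = [1.0, 1.0, 1.0]
pos0 = [[0.97000436, -0.24308753], [-0.97000436, 0.24308753], [0.0, 0.0]]
vel0 = [[0.466203685, 0.43236573], [0.466203685, 0.43236573], [-0.93240737, -0.86473146]]

e_start = energy(pos0, vel0, masses)
pos1, vel1 = leapfrog(pos0, vel0, masses, dt=0.001, steps=2000)
e_end = energy(pos1, vel1, masses)
print(f"relative energy drift after 2000 steps: {abs((e_end - e_start) / e_start):.1e}")
```

Leapfrog is chosen here because it keeps the energy error bounded over long runs; halving `dt` shrinks the drift roughly fourfold, at twice the computing cost.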

Perturbation theory was developed next: it treats the pulls of the other bodies as small corrections to an orbit that can be solved exactly. It helped a lot. Space exploration depends on it. It's not perfect, though. Sometimes another fudge factor called rectification is needed: the reference orbit is reset as the corrections grow while a system evolves. When NASA lands probes on Mars, no one knows exactly where the craft are located on its surface relative to any reference point on the Earth.

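Science uses perturbation methods in quantum mechanics and astronomy to describe the motions of both the very small and the very large. The general method can be described in mathematics: start from a simpler problem that can be solved exactly, then add corrections in powers of a small parameter, keeping only the first few terms.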
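As a toy illustration (chosen here for simplicity, not taken from any physics problem), take the equation x² + εx − 1 = 0. With ε = 0 the answer is x = 1. Substituting x = x₀ + εx₁ + ε²x₂ and matching powers of ε gives x₀ = 1, x₁ = −1/2, x₂ = 1/8. The Python sketch below compares that truncated series with the exact root from the quadratic formula.

```python
import math

def exact_root(eps: float) -> float:
    """Positive root of x**2 + eps*x - 1 = 0, from the quadratic formula."""
    return (-eps + math.sqrt(eps * eps + 4.0)) / 2.0

def perturbative_root(eps: float, order: int) -> float:
    """Series 1 - eps/2 + eps**2/8, truncated after the given order (0, 1, or 2)."""
    coefficients = [1.0, -0.5, 0.125]  # x0, x1, x2 found order by order
    return sum(c * eps ** k for k, c in enumerate(coefficients[: order + 1]))

for eps in (0.1, 0.5, 1.0):
    for order in range(3):
        err = abs(perturbative_root(eps, order) - exact_root(eps))
        print(f"eps={eps:<4} order={order}  error={err:.1e}")
```

For small ε each extra term shrinks the error dramatically; at ε = 1 the truncated series is noticeably off. Truncation is built into the method.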

Even when using the signals from six or more satellite navigation constellations (the American Global Positioning System, GPS, is the best known) deployed in medium Earth orbit by various countries, it's not possible to know exactly where anything is. Beet farmers out west combine the satellite systems of at least two countries to hone the courses of their tractors and plows.

On a good day farmers can locate a row of beets to within an eighth of an inch. That’s plenty good, but the several GPS systems they depend on are fragile and cost billions per year. In beet farming, an eighth inch isn’t perfect, but it’s close enough.

Quantum physics is another frontier of knowledge that presents roadblocks to precision. Physicists have invented more excuses for why they can’t get anything exactly right than probably any other group of scientists. Quantum physics is about a hundred years old, but today the problems seem more insurmountable than ever.

Insurmountable?

Why?

Well, the interaction of sub-atomic particles with themselves combined with, I don’t know, their interactions with swarms of virtual particles might disrupt the expected correlations between theories and experimental results. The mismatches can be spectacular. They sometimes dwarf the N-body problems of astronomy.

Worse, there is the problem of scales. For one thing, the electrical repulsion between two protons is a billion times a billion times a billion times a billion times stronger than their gravitational attraction. Forces appear to manifest themselves according to the distances across which they interact. It's odd.
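The arithmetic is easy to check. A short Python sketch, using CODATA 2018 values for the constants, compares Coulomb's law with Newton's law for two identical particles. Because both forces fall off as 1/r², the distance cancels and the ratio is a pure number: about 10^36 for two protons and about 4 × 10^42 for two electrons.

```python
# CODATA 2018 values in SI units
K_E = 8.9875517923e9           # Coulomb constant, N m^2 / C^2
E_CHARGE = 1.602176634e-19     # elementary charge, C
G = 6.67430e-11                # gravitational constant, N m^2 / kg^2
M_PROTON = 1.67262192369e-27   # kg
M_ELECTRON = 9.1093837015e-31  # kg

def electric_to_gravity_ratio(mass_kg: float) -> float:
    """Coulomb repulsion divided by gravitational attraction for two identical
    particles carrying one elementary charge each. The distance r cancels
    because both forces scale as 1/r**2."""
    return (K_E * E_CHARGE ** 2) / (G * mass_kg ** 2)

print(f"two protons:   {electric_to_gravity_ratio(M_PROTON):.2e}")
print(f"two electrons: {electric_to_gravity_ratio(M_ELECTRON):.2e}")
```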

Measuring the charge on electrons produces different results depending on the energy of the collision. At high energies, which probe short distances, the effective charge is larger and electrons interact more strongly; at low energies, not so much. So again, how can experimental results lead to theories that are both accurate and predictive? Divergent amplitudes that lead to infinities aren't helpful.
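Physicists call this the "running" of the coupling. The Python sketch below uses the textbook leading-logarithm formula α(Q) = α₀ / [1 − (α₀/3π) ln(Q²/mₑ²)], valid when Q is much larger than the electron mass, and it keeps only the electron's own cloud of virtual particles. That is a deliberate simplification: with every charged particle included, the measured value near the Z-boson mass (about 91 GeV) is closer to 1/128 than the electron-only estimate of roughly 1/134.

```python
import math

ALPHA_0 = 1 / 137.035999  # fine-structure constant measured at low energy
M_E_GEV = 0.000510999     # electron mass in GeV

def alpha_electron_loop(q_gev: float) -> float:
    """Leading-log running of alpha including ONLY the electron loop (valid for q >> m_e)."""
    log_term = math.log(q_gev ** 2 / M_E_GEV ** 2)
    return ALPHA_0 / (1 - ALPHA_0 / (3 * math.pi) * log_term)

for q in (0.01, 1.0, 91.19):  # GeV; 91.19 GeV is roughly the Z-boson mass
    print(f"Q = {q:>7} GeV  ->  1/alpha = {1 / alpha_electron_loop(q):.2f}")
```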

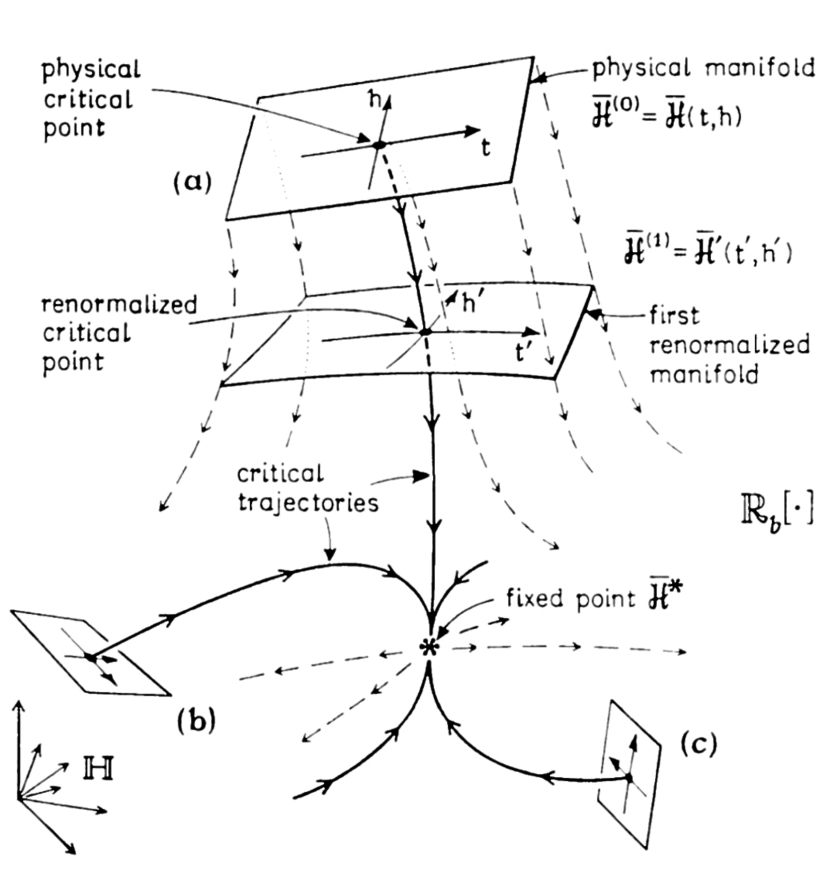

An infinity of scales pile up to produce troublesome infinities in the math, which tend to erode the predictive usefulness of formulas and diagrams. Once again, researchers are forced to fabricate fudge-factors. Renormalization is the buzzword for several popular methods.

Probably the best-known renormalization technique was described by Shinichiro Tomonaga in his Nobel lecture (he shared the 1965 prize). In the view of retired Harvard physicist Rodney Brooks, Tomonaga implied that "…replacing the calculated values of mass and charge, infinite though they may be, with the experimental values…" is the adjustment necessary to make things right, at least sometimes.
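The logic Tomonaga describes can be shown with a toy model in Python. Everything here is hypothetical: the function names, the correction strength `B`, and the "measured" value are invented for illustration, and real QED is far more involved. A "calculated" quantity equals a bare parameter plus a correction that grows without limit as the cutoff is raised. Fixing the bare parameter so the theory reproduces one measurement at a reference scale sends the bare value toward minus infinity as the cutoff grows, yet the prediction at a different scale comes out the same for every cutoff.

```python
import math

B = 0.05  # hypothetical strength of the loop correction (toy value)

def calculated(q: float, bare: float, cutoff: float) -> float:
    """Toy 'theory': bare parameter plus a correction that blows up as cutoff -> infinity."""
    return bare + B * math.log(cutoff / q)

def renormalized_bare(measured: float, mu: float, cutoff: float) -> float:
    """Choose the bare parameter so the theory reproduces the measured value at scale mu."""
    return measured - B * math.log(cutoff / mu)

MEASURED, MU = 0.30, 1.0  # hypothetical experimental input at reference scale mu

for cutoff in (1e3, 1e10, 1e100):
    bare = renormalized_bare(MEASURED, MU, cutoff)
    prediction = calculated(10.0, bare, cutoff)
    print(f"cutoff={cutoff:.0e}  bare={bare:+.3f}  prediction at q=10: {prediction:.6f}")
```

The infinity has not vanished; it has been absorbed into a bare parameter nobody measures directly.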

Isn't such an approach akin to cheating, at least to working theorists worth their salt? Well, maybe… but as far as I know, results are all that matter. Truncation and faulty data mean that math can never match physical reality perfectly anyway.

Folks who developed the theory of quantum electrodynamics (QED) used perturbation methods to bootstrap their ideas to useful explanations. Their work produced annoying infinities until they introduced creative renormalization techniques to chase them away.

At first physicists felt uncomfortable discarding the infinities that showed up in their equations; they hated introducing fudge-factors. Maybe they felt they were smearing theories with experimental results that weren’t necessarily accurate. Some may have thought that a poor match between math, theory, and experimental results meant something bad; they didn’t understand the hidden truth they struggled to lay bare.

Philosopher Robert Pirsig believed the number of possible explanations scientists could invent for phenomena was in fact unlimited. Despite all the math and convolutions of math, Pirsig believed something mysterious and intangible like quality or morality guided human understanding of the Cosmos. An infinity of notions he saw floating inside his mind drove him insane, at least in the years before he wrote his classic Zen and the Art of Motorcycle Maintenance.

The newest generation of scientists aren't embarrassed by anomalies. They "shut up and calculate." Digital somersaults executed to validate their work are impossible for average people to understand, much less perform. Researchers determine scales, introduce "cut-offs", and extract the appropriate physics to make suitable matches of their math with experimental results. They put the cart before the horse more often than not, some observers might say.

Apologists say, no. Renormalization is simply a reshuffling of parameters in a theory to prevent its failure. Renormalization doesn't sweep infinities under the rug; it is a set of techniques scientists use to make useful predictions in the face of divergences, infinities, and blow-ups across scales that might otherwise wreck progress in quantum physics, condensed matter physics, and even statistics. (From the YouTube video above.)

It’s not always wise to question smart folks, but renormalization seems a bit desperate, at least to my way of thinking. Is there a better way?

The complexity of the language scientists use to understand and explain the world of the very small is a convincing clue that they could be missing pieces of puzzles, which might not be solvable by humans regardless of how much IQ any petri-dish of gametes might deliver to the brains of future scientists.

It’s possible that humans, who use language and mathematics to ponder and explain, are not properly hardwired to model complexities of the universe. Folks lack brainpower enough to create algorithms for ultimate understanding.

People are like the first Commodore 64 computers (remember?); they need upgrades to become more like Sunway TaihuLight or Cray XK7 Titan super-computers to have any chance at all.

Perhaps Elon Musk’s Neuralink add-ons will help someday.

The smartest thinkers — people like Nick Bostrom and Pedro Domingos (who wrote The Master Algorithm) — suggest artificial super-intelligence might be developed and hardwired with hundreds or thousands of levels — each loaded with trillions of parallel links — to digest all meta-data, books, videos, and internet information (a complete library of human knowledge) to train armies of computers to discover paths to knowledge unreachable by puny humanoid intelligence.

Super-intelligent computer systems might achieve understanding in days or weeks that all humans working together over millennia might never acquire. The risk, of course, is that such intelligence, when unleashed, might enslave us all.

Another downside might involve communication between humans and machines. Think of a father — a math professor — teaching calculus to the family cat. It’s hopeless, right?

Larry Page, co-founder of Google and its parent company Alphabet Inc., who graduated from the same school as one of my sons, is pursuing artificial super-intelligence. He owns a piece of Tesla Motors, led by Elon Musk of SpaceX.

Imagine an expert in AI and quantum computation joining forces with billionaire Musk, who possesses the rocket-launching power of a country. Right now the two aren't getting along, Elon said. They don't speak. It could be a good thing, right?

What are the consequences?

Entrepreneurs don't like to be regulated. Temptations unleashed by unregulated military power and AI-attained scientific secrets falling into the hands of two men (nice men, as Elon and Larry appear to be) might in time push humanity to unmitigated… what's the word I'm looking for?

I heard Elon say he doesn’t like regulation, but he wants to be regulated. He believes super-intelligence will be civilization ending. He’s planning to put a colony on Mars to escape its power and ensure human survival.

Is Elon saying he doesn’t trust himself, that he doesn’t trust people he knows like Larry? Are these guys demanding governments save Earth from themselves?

I haven’t heard Larry ask for anything like that. He keeps a low profile. God bless him as he collects everything everyone says and does in cyber-space.

Think about it.

Think about what it means.

We have maybe ten years, tops; maybe less. Maybe it’s ten days. Maybe the worst has already happened, but no one said anything. Somebody, think of something — fast.

Who imagined that laissez-faire capitalism might someday spawn an airtight autocracy that enslaves the world?

Humans are wise to renormalize their aspirations, their civilizations, before infinities of misery wreck Earth and unfree futures emerge that no one wants.

Billy Lee